Artificial Intelligence (AI) is transforming the field of Materials Science, and predictive AI models are playing a significant role in this transformation. These models can provide valuable insights into the behavior and properties of materials, leading to the discovery and optimization of new and existing materials. In this blog post, we will explore the various ways in which predictive AI models are being used in Materials Science and the impact they are having.

Supervised learning is a type of machine learning (ML) in which the algorithm is trained on a labeled dataset, that is, a set of inputs paired with their known outputs. The goal is to predict the correct output for a given input. Because the algorithm learns from past examples, it can make predictions about data it has not seen before.
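To make the idea concrete, here is a minimal sketch of supervised learning in plain Python. The data is synthetic and purely illustrative (a made-up descriptor `x` and a made-up property `y`), and the straight-line model is just the simplest possible learner, not a method taken from any of the works discussed in this post.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def predict(model, x):
    slope, intercept = model
    return slope * x + intercept

# Synthetic labeled dataset (an assumption for illustration only):
# inputs x with known outputs y = 2x + 1 plus a little noise.
rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.1) for x in xs]

# Shuffle, then hold out 20% of the examples that the model never sees in training.
pairs = list(zip(xs, ys))
rng.shuffle(pairs)
train, test = pairs[:40], pairs[40:]

model = fit_line([x for x, _ in train], [y for _, y in train])

# Mean absolute error on the held-out examples estimates performance on unseen data.
test_mae = sum(abs(predict(model, x) - y) for x, y in test) / len(test)
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, test MAE={test_mae:.3f}")
```

The held-out split is the important part: the error is measured on examples the model did not train on, which is what "predicting unseen data" means in practice.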

One of the most exciting applications of predictive AI models in Materials Science is the discovery of new materials, which was the topic of a previous post. With the vast amount of data generated in the field, AI algorithms can help researchers identify patterns and relationships between the properties of materials and their performance in various applications. This information can then guide the synthesis of new materials with desired properties. For example, AI algorithms can analyze large datasets on energy storage materials, such as those used in batteries, to find which properties correlate with performance.
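As a rough illustration of what "identifying correlations" can mean at its simplest, the sketch below computes the Pearson correlation between each property column and a performance column. The column names and all numbers are placeholders invented for this example; they carry no physical meaning and are not measurements.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Placeholder dataset (hypothetical values, for illustration only).
dataset = {
    "property_a": [0.2, 0.5, 0.9, 1.3, 1.8],
    "property_b": [2.1, 3.4, 2.8, 2.2, 3.0],
    "performance": [110, 125, 150, 168, 190],
}

# Correlate every property with the performance column.
correlations = {
    name: pearson(values, dataset["performance"])
    for name, values in dataset.items()
    if name != "performance"
}

# List properties from the strongest to the weakest linear relationship.
for name, r in sorted(correlations.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: r = {r:+.3f}")
```

A linear correlation is only a first screening step. The learning models discussed in this post can capture nonlinear relationships that a single coefficient would miss.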

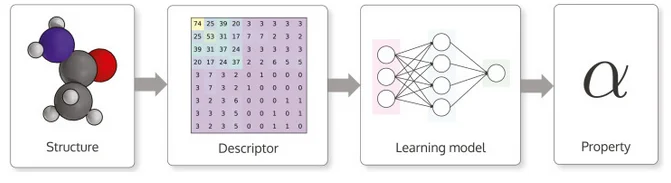

This area of work draws on a wide variety of learning models, from the most rudimentary to the most modern, each with its own field of application depending on the size and characteristics of the dataset. It is also essential to choose a material representation that suits the learning model, and, as we recently reviewed, a very diverse array of data types is used to represent materials as inputs for Artificial Intelligence. Unsurprisingly, the literature contains a broad spectrum of combinations of material representations and learning models for predicting material properties. A few examples include:

- Jha et al. (2018) developed an AI model (a deep neural network called ElemNet) that predicts the formation enthalpy of materials with high accuracy, taking into account only composition-based 1D vectors.

- Himanen et al. (2020) achieved remarkable results in predicting the formation energy of materials using SOAP and ACSF local atomic fingerprints (along with other descriptors) as inputs to a kernel ridge regressor (KRR), which is a very popular traditional ML model.

- Xie & Grossman (2018) proposed an AI model (crystal graph convolutional neural network, CGCNN) for predicting material properties directly from the connections of atoms in a crystal structure represented in the form of a graph. This approach provides a universal and interpretable representation with accurate predictions for various crystal types and compositions.
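The examples above rely on quite different material representations. The sketch below shows toy versions of two of them: a composition-based vector of element fractions and a graph built from atomic positions. This is not the ElemNet or CGCNN code. The element vocabulary, the helper names, and the three-atom geometry are assumptions made for illustration, and the graph builder ignores periodic boundary conditions, which a real crystal graph would have to handle.

```python
import math
import re

# Tiny illustrative vocabulary; a real model would cover most of the periodic table.
ELEMENTS = ["H", "Li", "O", "Na", "Cl", "Fe", "Ti"]

def composition_vector(formula):
    """Map a simple formula such as 'Fe2O3' to a fixed-length vector of element fractions.

    Only integer subscripts are handled; parentheses and charges are not.
    """
    counts = {}
    for symbol, amount in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(amount) if amount else 1)
    unknown = set(counts) - set(ELEMENTS)
    if unknown:
        raise ValueError(f"elements outside the toy vocabulary: {sorted(unknown)}")
    total = sum(counts.values())
    return [counts.get(element, 0) / total for element in ELEMENTS]

def neighbor_graph(positions, cutoff):
    """Return edges (i, j, distance) for atom pairs closer than `cutoff`.

    Simplification: periodic images are ignored.
    """
    edges = []
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            distance = math.dist(positions[i], positions[j])
            if distance <= cutoff:
                edges.append((i, j, distance))
    return edges

# Composition-based 1D vector: the same length for every material.
print(composition_vector("Fe2O3"))

# Graph representation: atoms are nodes, near neighbors are edges.
# The geometry below is a made-up three-atom chain, not a real structure.
species = ["Na", "Cl", "Na"]
positions = [(0.0, 0.0, 0.0), (2.8, 0.0, 0.0), (5.6, 0.0, 0.0)]
for i, j, distance in neighbor_graph(positions, cutoff=3.0):
    print(f"{species[i]}({i}) - {species[j]}({j}): {distance:.2f}")
```

The composition vector discards all structural information, while the graph keeps the connectivity between atoms. That trade-off is one reason different representations pair naturally with different learning models.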

Predictive models are essentially a good match between a material representation and a learning model (Himanen et al., 2020).
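To illustrate this pairing, here is a small self-contained sketch of kernel ridge regression with a Gaussian kernel, written in plain Python. It assumes each material has already been turned into a numeric descriptor vector. The one-dimensional descriptors, the sine-shaped target, and the values of `gamma` and `lam` are arbitrary choices for this toy example, and in practice a tested library implementation would be preferable to a hand-written solver.

```python
import math

def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) similarity between two descriptor vectors."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def krr_fit(X, y, gamma, lam):
    """Return dual coefficients alpha solving (K + lam * I) alpha = y."""
    K = [
        [rbf_kernel(a, b, gamma) + (lam if i == j else 0.0) for j, b in enumerate(X)]
        for i, a in enumerate(X)
    ]
    return solve(K, y)

def krr_predict(X_train, alpha, x, gamma):
    """Prediction is a kernel-weighted sum over the training examples."""
    return sum(a * rbf_kernel(xt, x, gamma) for a, xt in zip(alpha, X_train))

# Toy data: 1D "descriptors" and a smooth made-up target property.
X_train = [[i / 10] for i in range(11)]
y_train = [math.sin(2 * math.pi * x[0]) for x in X_train]

gamma, lam = 50.0, 1e-3
alpha = krr_fit(X_train, y_train, gamma, lam)

# Query a descriptor value that is not in the training set.
x_new = [0.55]
prediction = krr_predict(X_train, alpha, x_new, gamma)
print(f"predicted {prediction:.3f}, target {math.sin(2 * math.pi * x_new[0]):.3f}")
```

The kernel only ever sees descriptor vectors, so the quality of the predictions depends as much on the representation as on the regressor itself.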

In conclusion, predictive AI models are having a major impact on the field of Materials Science. With the help of supervised learning, these models learn from past examples and make predictions about unseen data, supporting the discovery and optimization of new and existing materials. From identifying correlations between properties and performance to discovering new materials with desired properties, the applications of predictive AI models in Materials Science are vast and exciting. With advances in material representations and the development of ever more diverse and useful learning architectures, the potential for further breakthroughs in the field is immense.